- #How to use advanced zip password recovery update

- #How to use advanced zip password recovery full

- #How to use advanced zip password recovery password

Gradients are averaged across all GPUs in parallel during the backward pass, then synchronously applied before beginning the ... This paper presents a simple and effective approach to solving the multi-label classification problem. To trade speed for GPU memory, you may pass in `-options model.` ... You can find SCNet there, a new 2021 cascaded (multi-stage) model. We report the inference time as the total time of network forwarding and post-processing, excluding the data loading time. ... `with_cp=True` to enable checkpointing in the backbone.
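The synchronous gradient averaging mentioned above can be illustrated with a minimal sketch. It uses plain Python lists instead of a real deep-learning framework, and the names `average_gradients` and `apply_gradients` are hypothetical helpers introduced only for this illustration; a real multi-GPU setup would rely on the framework's own collective operations.

```python
def average_gradients(per_replica_grads):
    """Average gradients element-wise across replicas (one list per GPU)."""
    n = len(per_replica_grads)
    # zip(*...) groups the i-th gradient of every replica together.
    return [sum(vals) / n for vals in zip(*per_replica_grads)]


def apply_gradients(weights, grads, lr):
    """Apply one plain SGD step with the averaged gradients."""
    return [w - lr * g for w, g in zip(weights, grads)]


# Two simulated replicas, each holding gradients for the same two weights.
weights = [1.0, 2.0]
per_replica_grads = [[0.5, 1.0], [1.5, 3.0]]

avg = average_gradients(per_replica_grads)
# Every replica applies the same averaged update, so weights stay in sync.
new_weights = apply_gradients(weights, avg, lr=0.5)
print(avg, new_weights)
```

Because every replica applies the same averaged gradient, all copies of the weights remain identical after each step.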

#How to use advanced zip password recovery password

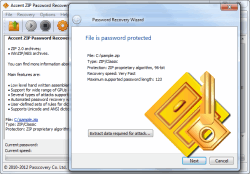

All versions efficiently parallelize the password recovery process across physical processors/cores and GPUs, as well as across distributed workstations. In a four-GPU system, this implementation is about 120 times faster than a single conventional processor, and more than four times faster than a single GPU device (i. ...). In this setup, you have multiple machines (called workers), each with one or several GPUs on them. The GPU memory consumption for this retina config looks a little strange. Can mmdetection train on multiple GPUs on Win10? I can train mmdetection on only one GPU. It adds a copy queue on the discrete GPU to move data from system memory to discrete-local memory. ..., to support multiple images in each minibatch. Whether you’re in the market for a GPU cluster for deep learning or need to buy GPU accessories, we’ve got you covered. The version will also be saved in trained models. The software is optimized for all modern processors, including Intel Core and AMD Ryzen. ... 005, which was decreased by 10 at 5000 iterations. `iou_threshold` (float): IoU threshold for NMS. Convert model from MMSegmentation to TorchServe. We adopt Mask R-CNN as the benchmarking method and conduct experiments on another V100 cluster. 2) Support for multiple ready-made frameworks, such as Faster RCNN, etc. Multi-Process Service is a CUDA programming model feature that increases GPU utilization with the concurrent execution of multiple processes on the GPU. We decompose the detection framework into different components, and one can easily construct a customized object detection framework by combining different modules. Much like what happens for single-host training, each available GPU will run one model replica, and the value of the variables of each replica is kept in sync after each batch. ... 0 line to specify how much of a thread to use on average: Total CPU Threads / Total GPU WU Total = `avg_ncpus`.
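The `avg_ncpus` ratio at the end of the paragraph above is simple arithmetic; a minimal sketch follows. The function name and the example figures (8 threads, 4 GPU work units) are assumptions made for illustration and do not come from the original text.

```python
def avg_ncpus(total_cpu_threads, total_gpu_work_units):
    """Return the average share of a CPU thread per GPU work unit.

    Implements the ratio from the text:
    total CPU threads / total GPU work units.
    """
    if total_gpu_work_units <= 0:
        raise ValueError("total_gpu_work_units must be positive")
    return total_cpu_threads / total_gpu_work_units


# Hypothetical machine: 8 CPU threads shared by 4 concurrent GPU work units.
print(avg_ncpus(8, 4))
```

A value below 1 means several GPU work units share a single CPU thread.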

The classification of hyperspectral imagery (HSI) is an important part of HSI applications. Some GPUs are very powerful on their own, but this power can be severely degraded if the system setup is suboptimal or if your app is using a suboptimal GPU for a specific task. FPS is tested with a single GeForce RTX 2080Ti GPU, using a batch size of 1. NVIDIA NCCL: The NVIDIA Collective Communication Library (NCCL) implements multi-GPU and multi-node communication primitives optimized for NVIDIA GPUs and networking. TL;DR: GA3C is a GPU-accelerated version of asynchronous actor-critic. ... 80(2), where 2 means the reported performance is the mean result of running this setting 2 times. (default: ``None``) tmpdir (str | None): Temporary directory to save the results of all processes. In a quick test with RandomX-based algorithms, we are seeing an improvement in hashrate compared to the previous Project.

#How to use advanced zip password recovery update

#How to use advanced zip password recovery full

You get easy access to the remote server via an RDP file and take full control. Kerod is a pure TensorFlow 2 implementation of object detection algorithms (Faster R-CNN, DeTr) aimed at production. There is some code reproducing the issue attached (bug. ...). However, its high computational complexity confines its usage in time-critical scenes. `ERROR | Notebook JSON is invalid: Additional properties are not allowed ('execution_count' was unexpected)`
